1 Introduction

Over the last two decades, the variable selection problem has been the center of attention in many research areas due to the surge of high-dimensional data resulting from advances in modern technologies. A recent study in epigenetics by The Cancer Genome Atlas network on the relationship between the survival time of ovarian cancer patients and their dna methylation profile of genomic features, for instance, has a complex structure with 396 observations and at least 9,000 covariates. Approximately 28% of the data are right censored and there are some signs of heterogeneity. These data motivated the development of new methodologies for capturing possible heterogeneity while accounting for right censoring in the finite mixture of accelerated failure time regression (fmaftr) models [29]. Because of the novelty of this research, there was no software package to be used in such situations. Thus, the fmrs package aims to provide a tool for variable selection and estimation in fmaftr. As a byproduct, variable selection in the finite mixture of regression (fmr) models [24] can be carried out using this package. This is because the likelihood of fmr is proportional to that of fmaftr when all observations are actually failure times. For ease of reference, we use the term “fmrs” to refer to both fmaftr and fmr hereafter, hence the name of the fmrs package.

There are several packages that focus on estimation and inference in finite mixture models. The mixtools package [2] provides a collection of R functions for fitting univariate and multivariate finite mixture models, primarily focusing on two-component mixture models without covariates. It covers parametric, nonparametric, and Bayesian approaches in mixture models. The EMMIXuskew package [21] estimates the parameters of finite mixtures of unrestricted multivariate skew-t distributions. The mixsmsn package [26] estimates the parameters of finite mixture models with components belonging to the class of scale mixtures of the skew-Normal distribution. The FlexMix package [13] performs parametric inference in finite mixture models, including concomitant variable models and varying and constant parameters for the component-specific generalized linear regression models. The CAMAN package [28] focuses on the analysis of finite semiparametric mixtures. The CensMix package [27] employs parameter estimation in censored linear regression models, where random errors follow a finite mixture of Normal or Student-t distributions.

For Bayesian approaches to fitting mixture models, there are several packages available, such as BayesMixSurv [23], BayesH [30], BayesCR [11], Ultimixt [18], and CUB [15], among others.

Some packages focusing on model-based clustering, unsupervised, supervised, and semi-supervised classification include mclust [10], GMCM [5], and Rmixmod [20]. The GLDEX package [32] considers fitting the mixture of generalized Lambda distributions. The MitISEM package [1] analyzes data assuming a mixture of Student-t distributions using the Importance Sampling weighted expectation–maximization (EM) algorithm. The MixGHD [33] deals with the mixture of generalized Hyperbolic distributions, providing results for model-based clustering, classification, and discriminant analysis. When dealing with missing values, the MixAll package [16] offers algorithms and methods for fitting parametric mixture models to mixed data. The SMNCensReg package [12] implements right, left, or interval-censored regression models under the family of scale mixture of Normal distributions, including Normal, Student-t, Pearson VII, Slash, or Contaminated Normal.

In addition to mixture models, there are approaches focusing on aft regression and semi-parametric survival models. For example, authors in [17] reviewed a semi-parametric aft model for the analysis of right censored data, and the spsurv package [25] provides tools for semi-parametric survival analysis.

Lastly, for aft models with unspecified error distribution, the aftgee package [8] is available and can provide robust solutions when parameter interpretability is not the main concern.

For a comprehensive list of packages that focus on cluster analysis and finite mixture models, refer to https://CRAN.R-project.org/view=Cluster.

To the best of our knowledge, there is currently no package available for variable selection in finite mixture of accelerated failure time regression models. The only package that focuses on variable selection in fmr models, although without censoring, is fmrlasso by [31]. However, this package is limited to the Least Absolute Shrinkage and Selection Operator (lasso) penalty and common tuning parameter. The package is implemented using R as the base code and functions. It is worth noting that packages written in R are often, if not always, less computationally efficient compared to those written in C for the same purpose. This inefficiency becomes more pronounced when analyzing large datasets.

There are several reasons why we have chosen to develop another software package for mixture models. Firstly, most of the previously mentioned packages primarily focus on non-regression mixture models. Secondly, with the exception of fmrlasso, none of them provide variable selection capabilities specifically for fmrs. Thirdly, none of the existing implementations address the case when the data are censored. Apart from these reasons, many of the mentioned packages are developed for specific applications and lack the flexibility to choose different subsets of variables for different components of the mixture model. Our package has been designed to address this limitation, allowing the inclusion of pre-specified subsets of covariates in the model. Additionally, our package offers the option for non-mixture regression as well.

To ensure standardized objects, the fmrs package is designed using S4 classes and methods [6, 7] and provides standard outputs. While S3 is simpler and easier to handle, S4 is a formal object-oriented system that allows for dispatching on multiple arguments and provides formal class definitions with helper functions for defining generics and methods. As all the base functions are implemented in C, the fmrs package offers reasonable speed. In this paper, all computations were performed using version 2.0.1 of the fmrs package and version 4.3.0 of R. Updates and future releases of the latest version of the fmrs package will be available on the Bioconductor - Open Source Software for Bioinformatics, at https://bioconductor.org/packages/fmrs/. An up-to-date version of this paper is also included as a vignette within the package.

The remainder of this paper is organized as follows. In the subsequent sections, we provide the theoretical background of fmrs, including estimation and variable selection methods. We catalog the functions, penalties, distributions, as well as a comprehensive list of arguments and controls implemented in the package. Furthermore, we offer illustrative guidelines on how to use the fmrs package by employing simulated datasets and comparing it with competing methods. We then present a real data analysis conducted on patients with lung cancer. Finally, we provide concluding remarks and outline the roadmap for future extensions of the fmrs package.

2 Models and Methods

We consider sparse estimation, i.e., estimation and variable selection, in two families of mixture models: fmaftr and fmr. We briefly describe each of these models and then present maximum likelihood estimation (mle) and maximum penalized likelihood estimation (mple).

2.1 Finite Mixture of Accelerated Failure Time Regression Models

Let X be the survival time with support $\mathcal{X}\subset {\mathbb{R}^{+}}$ and let $\boldsymbol{Z}={({Z_{1}},\dots ,{Z_{d}})^{\top }}\in {\mathbb{R}^{d}}$ be a vector of covariates that may have an effect on X. Define $T=\min \{Y,C\}$, where $Y=\log X$ and C is the logarithm of the censoring time, which is assumed to be noninformative and independent of X. Additionally, we use δ to denote the censoring indicator, i.e., $\delta =0$ if the time is censored. It is important to note that we do not directly observe X (or equivalently Y); instead, the observed data are $(T,\delta )$.

We say $V=(T,\delta ,\boldsymbol{Z})$ follows a finite mixture of aft regression models of order K if the conditional density of $(T,\delta )$ given $\boldsymbol{Z}=\boldsymbol{z}$ has the form:

Here, the ${\pi _{k}}$s ($0\lt {\pi _{k}}\lt 1$, with ${\textstyle\sum _{k=1}^{K}}{\pi _{k}}=1$) are the mixing probabilities, and ${f_{C}}(.)$ and ${S_{C}}(.)$ are the density and survival functions of C, respectively. Note that $f(.)$ and $S(.)$ are the density and survival functions of Y, where ${\theta _{k}}(\boldsymbol{z})=h({\beta _{0k}}+{\boldsymbol{z}^{\top }}{\boldsymbol{\beta }_{k}})$. In this equation, $h(.)$ is a known link function, ${\beta _{0k}}$ and ${\boldsymbol{\beta }_{k}}={({\beta _{k1}},{\beta _{k2}},\dots ,{\beta _{kd}})^{\top }}$ are the intercept and regression coefficients, respectively, and ${\sigma _{k}}$ is a dispersion parameter.

(2.1)

\[\begin{aligned}{}{f^{\ast }}(t,\delta ;\boldsymbol{z},\boldsymbol{\Psi })& ={\sum \limits_{k=1}^{K}}{\pi _{k}}{[f(t;{\theta _{k}}(\boldsymbol{z}),{\sigma _{k}})]^{\delta }}{[S(t;{\theta _{k}}(\boldsymbol{z}),{\sigma _{k}})]^{1-\delta }}\\ {} & \hspace{2em}\times {[{f_{C}}(t)]^{1-\delta }}{[{S_{C}}(t)]^{\delta }}.\end{aligned}\]It is worth noting that for each component of the mixture specified in (2.1), say the kth component, we have:

\[ Y=\log X=h({\beta _{0k}}+{\boldsymbol{z}^{\top }}{\boldsymbol{\beta }_{k}})={\beta _{0k}}+{\boldsymbol{z}^{\top }}{\boldsymbol{\beta }_{k}}+{\sigma _{k}}\epsilon .\]

Here, ϵ has a suitable distribution such as standard normal, extreme value, generalized extreme value, or logistic. A common aft model in survival analysis is based on the Log-Normal distribution [19] in which $\epsilon \sim N(0,1)$.The vector of all parameters is:

\[ \boldsymbol{\Psi }={({\beta _{01}},\dots ,{\beta _{0K}},{\boldsymbol{\beta }_{1}},\dots ,{\boldsymbol{\beta }_{K}},{\sigma _{1}},\dots ,{\sigma _{K}},{\pi _{1}},\dots ,{\pi _{K-1}})^{\top }},\]

which has a length of ${d^{\ast }}=K(d+3)-1$, increasing with the order of the mixture.Under the assumption of noninformative censoring,

2.2 Finite Mixture of Regression Models

Let Y be the response variable, and let $\boldsymbol{Z}={({Z_{1}},\dots ,{Z_{d}})^{\top }}$ be a vector of covariates that may have an effect on Y. We say that $(Y,\boldsymbol{Z})$ follows an fmr model of order K [24] if the conditional density of Y given $\boldsymbol{z}$ has the form:

It should be noted that for a given sample when all the observations are failure times, i.e., there is no censoring (${\delta _{i}}=1$), the density ${f^{\ast }}(t,\delta ;\boldsymbol{z},\boldsymbol{\Psi })$ in (2.2) is proportional to the density of a finite mixture of regression models. Therefore,

As a result, the mle of parameters of the fmr model is the same if we use the finite mixture of aft regression model with no censoring. Therefore, the fmrs package can also be used to analyze data using the fmr model.

(2.3)

\[ f(y;\boldsymbol{z},\boldsymbol{\Psi })={\sum \nolimits_{k=1}^{K}}{\pi _{k}}f(y;{\theta _{k}}(\boldsymbol{z}),{\sigma _{k}}).\](2.4)

\[ {f^{\ast }}(y;\boldsymbol{z},\boldsymbol{\Psi })\propto {f^{\ast }}(t,\delta =1;\boldsymbol{z},\boldsymbol{\Psi }).\]3 Maximum Likelihood Estimation in FMRs

The log-likelihood of FMRs is given as

The expectation–maximization (EM) algorithm is often used in the mixture of distributions to estimate model parameters. The complete log-likelihood function is then given as

(3.1)

\[\begin{aligned}{}{\ell _{n}}(\boldsymbol{\Psi })& ={\sum \nolimits_{i=1}^{n}}\log {\sum \nolimits_{k=1}^{K}}{\pi _{k}}{\left[f({t_{i}};{\theta _{k}}({\boldsymbol{z}_{i}}),{\sigma _{k}})\right]^{{\delta _{i}}}}\\ {} & \hspace{2em}{\left[S({t_{i}};{\theta _{k}}({\boldsymbol{z}_{i}}),{\sigma _{k}})\right]^{1-{\delta _{i}}}}.\end{aligned}\]

\[\begin{aligned}{}{\ell _{n}^{c}}(\boldsymbol{\Psi })& ={\sum \nolimits_{i=1}^{n}}{\sum \nolimits_{k=1}^{K}}{u_{ik}}\left[\log {\pi _{k}}+\log \left\{{\left[f({t_{i}};{\theta _{k}}({\boldsymbol{z}_{i}}),{\sigma _{k}})\right]^{{\delta _{i}}}}\right.\right.\\ {} & \hspace{2em}\left.\left.{\left[S({t_{i}};{\theta _{k}}({\boldsymbol{z}_{i}}),{\sigma _{k}})\right]^{1-{\delta _{i}}}}\right\}\right],\end{aligned}\]

where ${u_{ik}}$ is the latent variable indicating the membership of the ith individual to kth component of fmrs [29]. Having established the complete log-likelihood function, we follow E- and M-step.In the E-step, ${\tau _{ik}^{(m)}}=E\left[{u_{ik}}|{\boldsymbol{\Psi }^{(m)}},{V_{1}},\dots ,{V_{n}}\right]$ is the conditional expectation of the unobserved variable ${u_{ik}}$, where ${V_{i}}=({T_{i}},{\delta _{i}},{\boldsymbol{Z}_{i}})$, and is computed as

The M-step follows by maximizing $Q(\boldsymbol{\Psi };{\boldsymbol{\Psi }^{(m)}})$ using the updated ${\pi _{k}^{(m)}}={\textstyle\sum _{i=1}^{n}}{\tau _{ik}^{(m)}}/n$, where

Depending on the form of the sub-distribution, a numerical method may be required to obtain the updated estimates of Ψ. For the mixture of Log-Normal aft and the mixture of Normal models, the M-step of the algorithm has a closed-form solution, as developed below.

(3.2)

\[ {\tau _{ik}^{(m)}}=\frac{{\pi _{k}^{(m)}}{\left[f({t_{i}};{\theta _{k}^{(m)}}({\boldsymbol{z}_{i}}),{\sigma _{k}^{(m)}})\right]^{{\delta _{i}}}}{\left[S({t_{i}};{\theta _{k}^{(m)}}({\boldsymbol{z}_{i}}),{\sigma _{k}^{(m)}})\right]^{1-{\delta _{i}}}}}{{\textstyle\textstyle\sum _{j=1}^{K}}{\pi _{j}^{(m)}}{\left[f({t_{i}};{\theta _{j}^{(m)}}({\boldsymbol{z}_{i}}),{\sigma _{j}^{(m)}})\right]^{{\delta _{i}}}}{\left[S({t_{i}};{\theta _{j}^{(m)}}({\boldsymbol{z}_{i}}),{\sigma _{j}^{(m)}})\right]^{1-{\delta _{i}}}}}.\](3.3)

\[\begin{aligned}{}Q(\boldsymbol{\Psi };{\boldsymbol{\Psi }^{(m)}})& ={\sum \nolimits_{i=1}^{n}}{\sum \nolimits_{k=1}^{K}}{\tau _{ik}^{(m)}}\log {\pi _{k}^{(m)}}\\ {} & \hspace{1em}+{\sum \nolimits_{i=1}^{n}}{\sum \nolimits_{k=1}^{K}}{\tau _{ik}^{(m)}}\Big[{\delta _{i}}\log f({t_{i}};{\theta _{k}}(\boldsymbol{z}),{\sigma _{k}})\\ {} & \hspace{1em}+(1-{\delta _{i}})\log S({t_{i}};{\theta _{k}}(\boldsymbol{z}),{\sigma _{k}})\Big].\end{aligned}\]3.1 M-Step for Maximizing $Q(.)$ Function in the Mixture of Normal and Mixture of AFT Log-Normal Distributions

Let ${\boldsymbol{\tau }_{k}^{(m)}}=\text{diag}\left\{{\tau _{ik}^{(m)}}:i=1,\dots ,n\right\}$, $k=1,\dots ,K$. Denote the pseudo-survival times as:

where ${\omega _{ik}^{(m)}}=({t_{i}}-{\boldsymbol{z}_{i}^{\top }}{\boldsymbol{\beta }_{k}^{(m)}})/{\sigma _{k}^{(m)}}$, $A(\omega )=\phi (\omega )/(1-\Phi (\omega ))$, and $\phi (.)$ and $\Phi (.)$ are the density and cumulative distribution functions of $N(0,1)$, respectively. For each $k=1,2,\dots ,K$, let ${\boldsymbol{T}_{k}^{(m)}}={({t_{1k}^{(m)}},{t_{2k}^{(m)}},\dots ,{t_{nk}^{(m)}})^{\top }}$, and let $\mathcal{Z}={({\boldsymbol{z}_{1}},{\boldsymbol{z}_{2}},\dots ,{\boldsymbol{z}_{n}})^{\top }}$ be the $n\times d$ dimensional design matrix. The updated estimates of the parameters for the mixture of Log-Normal aft models (when t is actually the logarithm of t) and the mixture of Normal models for $k=1,2,\dots ,K$ are given by

and

(3.4)

\[ {t_{ik}^{(m)}}={\delta _{i}}{t_{i}}+(1-{\delta _{i}})\left\{{\boldsymbol{z}_{i}^{\top }}{\boldsymbol{\beta }_{k}^{(m)}}+{\sigma _{k}^{(m)}}A({\omega _{ik}^{(m)}})\right\},\](3.5)

\[ {\boldsymbol{\beta }_{k}^{(m+1)}}={\left({\mathcal{Z}^{\top }}{\boldsymbol{\tau }_{k}^{(m)}}\mathcal{Z}\right)^{-1}}{\mathcal{Z}^{\top }}{\boldsymbol{\tau }_{k}^{(m)}}{\boldsymbol{T}_{k}^{(m)}},\](3.6)

\[ {\sigma _{k}^{(m+1)}}=\sqrt{\frac{{\textstyle\textstyle\sum _{i=1}^{n}}{\tau _{ik}^{(m)}}{({t_{ik}^{(m)}}-{\boldsymbol{z}_{i}^{\top }}{\boldsymbol{\beta }_{k}^{(m)}})^{2}}}{{\textstyle\sum \limits_{i=1}^{n}}{\tau _{ik}^{(m)}}\left[{\delta _{i}}+(1-{\delta _{i}})\{A({\omega _{ik}^{(m)}})[A({\omega _{ik}^{(m)}})-{\omega _{ik}^{(m)}}]\}\right]}}.\]3.2 M-Step for Maximizing $Q(.)$ Function in Mixture of AFT Weibull Distributions

There is no closed-form solution for parameter estimation in the Weibull distribution. Hence, we use a numerical method such as the Newton-Raphson algorithm [29]. The iterative algorithm is basically given as

\[ {\boldsymbol{\beta }^{\text{new}}}={\boldsymbol{\beta }^{\text{old}}}-{I^{-1}}({\boldsymbol{\beta }^{\text{old}}})\hspace{2.5pt}U({\boldsymbol{\beta }^{\text{old}}}),\]

where U and I are the first and second derivative functions of the log-likelihood, respectively, evaluated at ${\boldsymbol{\beta }^{\text{old}}}$.Let ${Y^{\ast }}$ follow a Weibull distribution. Then $y=\log {y^{\ast }}$ follows the extreme value distribution with the probability density function (pdf) and cumulative distribution function (cdf) given by

\[ f(y;{\boldsymbol{x}^{\top }}\boldsymbol{\beta },\sigma )\hspace{-0.1667em}=\hspace{-0.1667em}\frac{1}{\sigma }\exp \hspace{-0.1667em}\left(\frac{y-{\boldsymbol{x}^{\top }}\boldsymbol{\beta }}{\sigma }\right)\exp \hspace{-0.1667em}\left(-\exp \hspace{-0.1667em}\left(\frac{y-{\boldsymbol{x}^{\top }}\boldsymbol{\beta }}{\sigma }\right)\right)\]

and

\[ F(y;{\boldsymbol{x}^{\top }}\boldsymbol{\beta },\sigma )=1-\exp \left(-\exp \left(\frac{y-{\boldsymbol{x}^{\top }}\boldsymbol{\beta }}{\sigma }\right)\right),\]

respectively. For the kth component, we have

\[ U({\beta _{0k}};{\boldsymbol{\beta }_{k}^{\text{old}}},\boldsymbol{y})=-{\sum \limits_{i=1}^{n}}\frac{{t_{ik}^{\text{old}}}{\delta _{i}}}{{\sigma _{k}^{\text{old}}}}+{\sum \limits_{i=1}^{n}}\frac{{t_{ik}^{\text{old}}}}{{\sigma _{k}^{\text{old}}}}{e^{\left(\frac{{y_{i}}-{\boldsymbol{x}_{i}^{\top }}{\boldsymbol{\beta }_{k}^{\text{old}}}}{{\sigma _{k}^{\text{old}}}}\right)}},\]

and

\[ U({\beta _{jk}};{\boldsymbol{\beta }_{k}^{\text{old}}},\boldsymbol{y})=-{\sum \limits_{i=1}^{n}}\frac{{t_{ik}^{\text{old}}}{\delta _{i}}}{{\sigma _{k}^{\text{old}}}}{x_{ij}}+{\sum \limits_{i=1}^{n}}\frac{{t_{ik}^{\text{old}}}}{{\sigma _{k}^{\text{old}}}}{x_{ij}}{e^{\left(\frac{{y_{i}}-{\boldsymbol{x}_{i}^{\top }}{\boldsymbol{\beta }_{k}^{\text{old}}}}{{\sigma _{k}^{\text{old}}}}\right)}},\]

for $j=1,\dots ,d$. For the second derivatives, we have

\[ I({\beta _{jk}},{\beta _{rk}};{\boldsymbol{\beta }_{k}^{\text{old}}},\boldsymbol{y})=-{\sum \limits_{i=1}^{n}}\frac{{t_{ik}^{\text{old}}}}{{\sigma _{k}^{\text{old}}}}{x_{ij}}{x_{ir}}\exp \left(\frac{{y_{i}}-{\boldsymbol{x}_{i}^{\top }}{\boldsymbol{\beta }_{k}^{\text{old}}}}{{\sigma _{k}^{\text{old}}}}\right)\]

and

\[ I({\beta _{jk}},{\beta _{0k}};{\boldsymbol{\beta }_{k}^{\text{old}}},\boldsymbol{y})=-{\sum \limits_{i=1}^{n}}\frac{{t_{ik}^{\text{old}}}}{{\sigma _{k}^{\text{old}}}}{x_{ij}}\exp \left(\frac{{y_{i}}-{\boldsymbol{x}_{i}^{\top }}{\boldsymbol{\beta }_{k}^{\text{old}}}}{{\sigma _{k}^{\text{old}}}}\right),\]

for $j,r=1,\dots ,d$, and

It is widely acknowledged that finite mixture models, specifically finite mixtures of regression models, are identifiable up to a permutation [14, 9]. As a consequence, in practical applications, it is common for the estimated components to deviate from the order of the simulation setup or the true order in the population (which remains unknown). To establish the correct order, it becomes necessary to possess some knowledge about the regression coefficients’ locations and select initial values accordingly. When analyzing real data, one possible approach to rearranging the components is to consider the order of their grand means.

4 Variable Selection in FMRs

Having the current estimates ${\boldsymbol{\Psi }^{(m)}}$, the penalized $Q(.)$ function is given as

where the penalty is replaced by the local quadratic approximation

where

(4.1)

\[\begin{aligned}{}Q(\boldsymbol{\Psi };{\boldsymbol{\Psi }^{(m)}})& ={\sum \nolimits_{i=1}^{n}}{\sum \nolimits_{k=1}^{K}}{\tau _{ik}^{(m)}}\log {\pi _{k}^{(m)}}\\ {} & \hspace{1em}+{\sum \nolimits_{i=1}^{n}}{\sum \nolimits_{k=1}^{K}}{\tau _{ik}^{(m)}}\Big[{\delta _{i}}\log {f_{Y}}({t_{i}};{\theta _{k}}(\boldsymbol{z}),{\sigma _{k}})\\ {} & \hspace{1em}+(1-{\delta _{i}})\log {S_{Y}}({t_{i}};{\theta _{k}}(\boldsymbol{z}),{\sigma _{k}})\Big]-{\mathbf{p}_{{\boldsymbol{\lambda }_{n}}}}(\boldsymbol{\Psi }),\end{aligned}\]

\[\begin{aligned}{}{\mathbf{p}_{{\boldsymbol{\lambda }_{n}}}}(\boldsymbol{\Psi })& \approx {\mathbf{p}_{{\boldsymbol{\lambda }_{n}}}}(\boldsymbol{\Psi };{\boldsymbol{\Psi }^{(m)}})=n{\sum \limits_{k=1}^{K}}{\pi _{k}^{(m)}}{\sum \limits_{j=1}^{d}}\Big\{{p_{{\lambda _{n,k}}}}({\beta _{kj}^{(m)}})\\ {} & \hspace{1em}+\frac{{p^{\prime }_{{\lambda _{n,k}}}}({\beta _{kj}^{(m)}})}{2{\beta _{kj}^{(m)}}}\left({\beta _{kj}^{2}}-{({\beta _{kj}^{(m)}})^{2}}\right)\Big\}.\end{aligned}\]

The maximum penalized likelihood estimator (mple), when the sub-distributions are log-normal, is then given as

(4.2)

\[ {\boldsymbol{\beta }_{k}^{(m+1)}}={\left({\mathcal{Z}^{\top }}{\boldsymbol{\tau }_{k}^{(m)}}\mathcal{Z}+{\boldsymbol{\Sigma }_{k}}({\boldsymbol{\beta }_{k}^{(m)}})\right)^{-1}}{\mathcal{Z}^{\top }}{\boldsymbol{\tau }_{k}^{(m)}}{\boldsymbol{T}_{k}^{(m)}},\]

\[ {\boldsymbol{\Sigma }_{k}}({\boldsymbol{\beta }_{k}^{(m)}})\hspace{-0.1667em}=\hspace{-0.1667em}\text{diag}\hspace{-0.1667em}\left\{n{\pi _{k}^{(m+1)}}{p^{\prime }_{{\lambda _{n}},k}}({\beta _{kj}^{(m)}})/{\beta _{kj}^{(m)}}\hspace{-0.1667em}:j\hspace{-0.1667em}=\hspace{-0.1667em}1,2,\dots ,d\right\}\]

and ${\sigma _{k}^{(m+1)}}$ is equal to (3.6) when replacing (4.2) in (3.6). Having specified a threshold, the elements of ${\boldsymbol{\beta }_{k}^{(m+1)}}$ that fall below the threshold will be set to zero, which leads to variable selection [29]. Note that for Weibull, the NR algorithm must be carried out in the M-step of the EM algorithm. Algorithm 3 in Appendix A provides instructions to obtain mples.It is known that the fitted model of fmrs depends on the choice of initial values. Furthermore, it is argued that the mle could be a set of good initial values to obtain mples. The following penalty functions are implemented in the fmrs package:

where ${p^{\prime }_{n}}(\cdot ;\lambda )$ is the first derivative of penalty with respect to θ and ${(x)_{+}}=\max \{0,x\}$.

-

• lasso: $\frac{{p_{n}}(\theta ;\lambda )}{{n^{2}}}=\lambda |\theta |$;

-

• adaptive lasso: $\frac{{p_{n}}(\theta ;\lambda )}{{n^{2}}}=\lambda w|\theta |$, for some (possibly random) known weights w;

-

• The Minimax Concave Penalty (mcp):$\frac{{p^{\prime }_{n}}(\theta ;\lambda )}{{n^{2}}}=\operatorname{sgn}(\theta )\hspace{2.5pt}\frac{{(a\lambda -|\theta |)_{+}}}{a}$;

-

• Smoothly Clipped Absolute Deviation (scad):$\frac{{p^{\prime }_{n}}(\theta ;\lambda )}{{n^{2}}}=\operatorname{sgn}(\theta )\left\{\lambda I(|\theta |\le \lambda )+\frac{{(a\lambda -|\theta |)_{+}}}{a-1}I(|\theta |\gt \lambda )\right\}$,

4.1 Choice of Tuning Parameters

Two approaches are available for choosing tuning parameters: the common approach and the component-wise approach. If the common tuning parameter approach is adopted, one can choose the value that minimizes the bic from a set of candidates within the interval $(0,{\lambda _{\text{max}}}]$, where ${\lambda _{\text{max}}}$ is a pre-specified value. This approach is suitable for datasets with sufficiently large sample sizes, and it reduces the computational burden when a common tuning parameter is used.

On the other hand, if we adopt the component-wise approach, we will search for the optimal values $({\lambda _{1}},\dots ,{\lambda _{K}})$ from a set of candidate values derived from the interval $(0,{\lambda _{\text{max}}}]$. We choose the combination that minimizes the component-wise bic, which is defined below.

Let ${\tilde{\tau }_{ik}}$ be the mle in (3.2). For a given k, the component-wise log-likelihood is defined as

(4.3)

\[\begin{aligned}{}{\tilde{\ell }_{nk}}({\boldsymbol{\Psi }_{k}})& ={\sum \nolimits_{i=1}^{n}}{\tilde{\tau }_{ik}}\log \left\{{\left[{f_{Y}}({t_{i}},{\theta _{k}}({\boldsymbol{z}_{i}}),{\sigma _{k}})\right]^{{\delta _{i}}}}\right.\\ {} & \hspace{2em}\left.{\left[{S_{Y}}({t_{i}},{\theta _{k}}({\boldsymbol{z}_{i}}),{\sigma _{k}})\right]^{1-{\delta _{i}}}}\right\}.\end{aligned}\]Let ${\widehat{\boldsymbol{\Psi }}_{k}}({\lambda _{k}})$ be the mple under (4.3) for a given ${\lambda _{k}}$; i.e.,

where ${n_{k}}={\textstyle\sum _{i=1}^{n}}{\tilde{\tau }_{ik}}$, $\text{DF}({\lambda _{k}})={\textstyle\sum _{j=1}^{d}}I({\hat{\beta }_{kj}}\ne 0)$ is the number of estimated non-zero coefficients, and ${\tilde{\ell }_{nk}}$ is evaluated at ${\widehat{\boldsymbol{\Psi }}_{k}}({\lambda _{k}})$. The component-wise tuning parameter is then chosen as

\[ {\widehat{\boldsymbol{\Psi }}_{k}}({\lambda _{k}})=\arg \underset{{\boldsymbol{\Psi }_{k}}}{\max }\left[{\tilde{\ell }_{nk}}({\boldsymbol{\Psi }_{k}})-n{\pi _{k}}{\sum \nolimits_{j=1}^{d}}{p_{{\lambda _{k}}}}(|{\beta _{jk}}|)\right].\]

We define the component-wise bic as

(4.4)

\[ {\text{BIC}_{k}}({\lambda _{k}})=-2{\tilde{\ell }_{nk}}({\widehat{\boldsymbol{\Psi }}_{k}}({\lambda _{k}}))+\text{DF}({\lambda _{k}})\times \log {n_{k}},\]

\[ {\hat{\lambda }_{k}}={\arg \min _{{\lambda _{k}}\in (0,{\lambda _{\max }}]}}{\text{BIC}_{k}}({\lambda _{k}}),\hspace{2.5pt}\text{for}\hspace{2.5pt}k=1,\dots ,K.\]

By choosing a grid of values in the interval $(0,{\lambda _{\text{max}}}]$ with a length of L, the total number of searches required to find K tuning parameters is reduced to $K\times L$. In contrast, using the non-component-wise approach would require ${L^{K}}$ searches to find K tuning parameters. By adopting the component-wise approach, we significantly reduce the number of searches. It is important to note that although this approach has not been theoretically studied, our simulations have shown promising results. Algorithm 2 in Appendix A provides instructions for obtaining the tuning parameters.4.2 Choice of Mixture Order

So far, we assumed that the order of fmrs is known a priori. However, in many real applications, such information is not available. For order selection in mixture model setups, information criteria such as bic have been extensively studied [24, 14]. In fmrs, we suggest using bic for order selection. Consider the possible values $K\in \{1,\dots ,{K_{\max }}\}$ for the order of mixture, where ${K_{\max }}$ is a pre-specified upper bound. The optimal order, denoted as $\hat{K}$, is chosen as the one that minimizes the quantity

\[\begin{aligned}{}{\text{BIC}^{\ast }}(K)& =-2{\ell _{n}}({\widehat{\boldsymbol{\Psi }}_{n,K}})\\ {} & \hspace{1em}+\left[(3K-1)+{\sum \nolimits_{k=1}^{K}}{\sum \nolimits_{j=1}^{d}}\text{I}({\hat{\beta }_{jk}}\ne 0)\right]\log n,\end{aligned}\]

where ${\ell _{n}}$ in (3.1) is evaluated at ${\widehat{\boldsymbol{\Psi }}_{n,K}}$, the vector of estimated parameters with order K. Note that this approach has yet to be theoretically studied. In simulation studies, however, promising results are observed.5 Preliminaries and Main Functions

In the following subsections, we present comprehensive lists of all available arguments and provide detailed descriptions of the main functions in the fmrs package.

5.1 Preliminaries

The command ‘help(package = “fmrs”)’ returns a list of all available arguments in fmrs. The basic functions are implemented in the C programming language, which greatly improves memory management and package speed. The package utilizes S4 objects and methods. Table 1 presents a list of all S4 classes along with their descriptions. Table 2 provides a comprehensive list of S4 methods and functions, including their arguments and associated default values. Table 3 displays a list of arguments, controls, and notations, along with their default values and descriptions. Currently, the fmaftr supports Log-Normal and Weibull distributions, while the fmr includes the Normal distribution in the package.

Table 1

List of S4 classes in the fmrs package.

| class | Slot | Value | Description |

| fmrsfit | y | A length-nobs numeric vector | Response vector |

| delta | A length-nobs numeric vector | Censoring vector | |

| x | A dimension-nobs-ncov numeric matrix | Covariates | |

| nobs | A length-one numeric vector | Number of observations | |

| ncov | A length-one numeric vector | Number of covariates | |

| ncomp | A length-one numeric vector | Order of the mixture | |

| coefficients | A length-(ncov+1)-ncomp numeric matrix | Fitted regression coefficients | |

| dispersion | A length-ncomp numeric vector | Fitted dispersions | |

| mixProp | A length-ncomp numeric vector | Fitted mixing proportions | |

| logLik | A length-one numeric vector | Log-likelihood | |

| BIC | A length-one numeric vector | Bayesian information criteria | |

| nIterEMconv | A length-one numeric vector | Number of the EM algorithm iterations | |

| disFamily | A length-one character vector | Name of sub-distributions | |

| penFamily | A length-one character vector | Penalty function | |

| lambPen | A length-ncomp numeric vector | Tuning parameters | |

| lamRidge | A length-one numeric vector | Ridge tuning parameter | |

| MCPGam | A length-one numeric vector | mcp’s extra tuning parameter | |

| SICAGam | A length-one numeric vector | sica’s extra tuning parameter | |

| model | A length-one character vector | Fitted model | |

| fitted | A dimension-nobs-ncomp numeric matrix | Predicted values | |

| residuals | A dimension-nobs-ncomp numeric matrix | Predicted residuals | |

| weights | A dimension-nobs-ncomp numeric matrix | Predicted weights | |

| activeset | A dimension-(ncov+1)-ncomp matrix | Parameters that must be active in model | |

| selectedset | A dimension-(ncov)-ncomp matrix | Parameters selected via variable selection | |

| fmrstunpar | ncomp | A length-one numeric vector | Order of mixture |

| ncov | A length-one numeric vector | Number of covariates | |

| lambPen | A length-ncomp numeric vector | Tuning parameters | |

| MCPGam | A length-ncomp numeric vector | mcp’s extra tuning parameters | |

| SICAGam | A length-ncomp numeric vector | sica’s extra tuning parameters | |

| disFamily | A length-one character vector | Name of sub-distributions | |

| penFamily | A length-one character vector | Penalty function | |

| lamRidge | A length-one numeric vector | Ridge tuning parameter | |

| model | A length-one character vector | Fitted model | |

| activeset | A dimension-(ncov+1)-ncomp matrix | Parameters that must be active in model |

Table 2

List of S4 methods and functions in the fmrs package.

| Generic Name | Description |

| fmrs.gendata | Generates a dataset from FMRs under the specified setting. |

| fmrs.mle | Performs mle and ridge regression for FMRs. |

| fmrs.tunsel | Computes component-wise tuning parameters based on a bic for FMRs. |

| fmrs.varsel | Performs variable selection and computes penalized mle for FMRs. |

| BIC | Provides the estimated bic of an FMRs from an fmrsfit-class |

| coefficients | Provides the estimated regression coefficients from the FMRs from an fmrsfit-class |

| dispersion | Provides the estimated dispersions of the fitted FMRs from an fmrsfit-class |

| fitted | Provides the fitted response of the fitted FMRs from an fmrsfit-class |

| logLik | Provides the estimated logLikelihood of an FMRs from an fmrsfit-class |

| mixProp | Provides the estimated mixing proportions of an FMRs from an fmrsfit-class |

| ncomp | Provides the order of an FMRs from an fmrsfit-class |

| ncov | Provides the number of covariates of an FMRs from an fmrsfit-class |

| nobs | Provides the number of observations in an FMRs from an fmrsfit-class |

| residuals | Provides the residuals of the fitted FMRs from an fmrsfit-class |

| summary | Displays estimated coefficients, dispersions, and mixing proportions or selected tuning parameters |

| weights | Provides the weights of fitted observations for each observation under all components of an FMRs model |

Table 3

List of arguments, controls and notations in the fmrs package.

| Name | Default | Description |

| Arguments | ||

| y | Response vector | |

| delta | Censoring indicator vector | |

| x | Design matrix (covariates) | |

| nObs | A numeric value represents the number of observations (sample size) | |

| nComp | 2 | A numeric value represents the order of mixture in FMRs |

| nCov | A numeric value represents the number of covariates in design matrix | |

| mixProp | A vector of mixing proportions which their sum must be one | |

| rho | A numeric value in [-1, 1] which represents the correlation between covariates of design matrix | |

| dispersion | A vector of positive values for dispersion parameters of sub-distributions in FMRs | |

| coeff | A vector of length nComp*(nCov+1) of all regression coefficients including intercepts. | |

| umax | A numeric value that represents the upper bound in Uniform distribution for censoring | |

| disFamily | "lnorm" | A sub-distribution family. The choices are "norm" for fmr, "lnorm" for fmaftr with Log-Normal |

| sub-distribution,"weibull" for fmaftr with Weibull sub-distribution. | ||

| Controls | ||

| conveps | 1e-08 | A positive number for avoiding NaN in computing divisions |

| convepsEM | 1e-08 | A positive value for threshold of convergence in the EM algorithm |

| convepsNR | 1e-08 | A positive value for threshold of convergence in the Newton-Raphson algorithm |

| gamMixPor | 1 | Proportion of mixing parameters in the penalty function. The value must belong to the interval $[0,1]$. |

| If gamMixPor = 0, the penalty structure is no longer a mixture. | ||

| initCoeff | A vector of initial values for coefficients including intercepts | |

| initmixProp | A vector of initial values for the proportion of components | |

| initDispersion | A vector of initial values for standard deviations | |

| lambPen | A vector of lambda for penalty | |

| lambRidge | 0 | Lambda for the ridge penalty or Elastic Net |

| lambMCP | Extra tuning parameter for the mcp penalty | |

| lambSICA | 5 | Extra tuning parameter for the sica penalty |

| LambMin | 0.01 | A positive value for the minimum value of tuning parameters |

| LambMax | 1.0 | A positive value for the maximum value of tuning parameters |

| nLamb | 100 | An integer for the number of tuning parameters between LambMin and LambMax |

| nIterEM | 400 | Maximum number of iterations for the EM algorithm |

| nIterNR | 2 | Maximum number of iterations for the Newton-Raphson algorithm |

| penFamily | "lasso" | The penalty used in variable selection method. The available options are "lasso", "adplasso", "mcp", |

| "scad", "sica" and "hard". | ||

| NRpor | 2 | A positive integer for the maximum number of searches in the Newton-Raphson algorithm |

| activeset | A 0-1 matrix that shows which coefficients must be active in the model. This could be an oracleset | |

| as well. | ||

| cutpoint | 0.05 | A positive integer for setting the estimates to zero if they are too small. |

| Notations | ||

| FMRs | Finite Mixture of Regression Models including fmaftr and FMR | |

| fmaftr | Finite Mixture of Accelerated Failure Time Regression | |

| FMR | Finite Mixture of Regression |

5.2 Description of the Main Functions

The fmrs package has two main classes and four main functions. The classes are as follows:

The class fmrsfit is designed to store fitted models obtained through mle and mple using the functions fmrs.mle and fmrs.varsel. It stores various information such as the response variable, design matrix, censoring information, parameter estimates, fitted values, posterior probabilities, and evaluation criteria like log-likelihood and bic. The R function summary provides a standard output of this information.

The class fmrstunpar is introduced to store tuning parameters obtained using the component-wise bic approach in (4.4), which can be utilized in the fmrs.varsel function.

The package introduces 17 functions, with four of them being the main functions. The arguments for these functions are described in Tables 1-3. Below, we provide a brief introduction to these functions.

The R function fmrs.gendata is used to simulate a dataset from fmrs. It has the following form:

fmrs.gendata(nObs, nComp, nCov, coeff,

dispersion, mixProp, rho, umax, disFamily, ...),

where nObs, nComp, nCov, coeff, dispersion, mixProp and disFamily represent the sample size, the order of fmrs, the number of regression covariates, the regression coefficients, the dispersions of errors, the mixing proportions, and the distribution of components of fmrs, respectively.

It is important to note that rho (i.e., ρ) is used in the variance-covariance matrix to simulate the design matrix X from a multivariate Gaussian distribution with mean $\mathbf{0}$ and variance-covariance matrix ${\Sigma _{X}}=({\rho ^{|l-m|}})$. The right censoring times are generated using a Uniform distribution with lower and upper bounds of 0 and umax, respectively. Depending on the choice of disFamily, the function fmrs.gendata generates a dataset from fmaftr or fmr. The default value is disFamily = "lnorm". If disFamily = "norm", the function ignores the censoring parameter umax and generates a dataset from fmr with Normal sub-distributions. On the other hand, if disFamily = "lnorm" or disFamily = "weibull", the function produces a dataset from fmaftr with Log-Normal or Weibull sub-distribution. Consequently, fmrs.gendata returns a list containing a vector of responses y, a matrix of covariates x, a vector of censoring indicators delta, and the name of the sub-distributions of the mixture model.

The C function fmrs.mle returns the mle for the parameters of fmrs. It has the following form:

fmrs.mle(y, delta, x, nComp, disFamily,

initCoeff, initDispersion, initmixProp,

oracleset, ... ).

Here, delta, initCoeff, initDispersion, initmixProp, and oracleset represent the censoring indicators, the initial values for regression coefficients, the dispersions, and the mixing proportions, and the set of oracle covariates that should be included in each component of the mixture model, respectively. The remaining arguments in fmrs.mle are controlling parameters.

This function returns a fitted fmrs model that includes the mle of regression parameters, standard deviations, and mixing proportions based on the EM algorithm. The output also includes the log-likelihood and bic for the fitted model, the maximum number of iterations used in the EM algorithm, and the type of the fitted fmrs (i.e., FMAFTR or FMR). To perform Ridge regression, a positive value must be chosen for lambRidge, which is the tuning parameter of the Ridge penalty.

The default values for the arguments are as follows: nComp = 2, disFamily = "lnorm", lambRidge = 0, nIterEM = 400, nIterNR = 2, conveps = 1e-08, convepsEM = 1e-08, convepsNR = 1e-08, and NRpor = 2.

The C function fmrs.tunsel is used to search for a data-driven tuning parameter from a selected set of values. It has the following form:

fmrs.tunsel(y, delta, x, nComp, disFamily,

initCoeff, initDispersion, initmixProp,

penFamily, lambRidge, oracleset, lambMCP,

lambSICA, LambMin, LambMax, nLamb, ...).

Here, penFamily, lambMCP, and lambSICA represent the penalty function, the hyper-parameter for mcp, and the hyper-parameter for sica penalty. Additionally, LambMin, LambMax, and nLamb specify the minimum, maximum, and the number of tuning parameters used to obtain the optimal tuning parameter based on the component-wise tuning parameter selection approach. The function returns an fmrstunpar class.

The default values for the arguments are as follows: disFamily = "lnorm", penFamily = "lasso", lambRidge = 0, nIterEM = 400, nIterNR = 2, conveps = 1e-08, convepsEM = 1e-08, convepsNR = 1e-08, NRpor = 2, gamMixPor = 1, cutpoint = 0.05, LambMin = 0.01, LambMax = 1, and nLamb = 100.

The C function fmrs.varsel is used to perform variable selection (mple) for the parameters of fmrs. It has the following form:

fmrs.varsel(y, delta, x, nComp, disFamily,

initCoeff, initDispersion, initmixProp,

penFamily, lambPen, lambRidge, oracleset,

lambMCP, lambSICA, ... ).

Here, lambPen represents the set of tuning parameters for the penalty function. The function returns an fmrstfit class that stores the values of mple for the regression parameters.

The default values for the arguments are as follows: disFamily = "lnorm", penFamily = "lasso", lambRidge = 0, nIterEM = 2000, nIterNR = 2, conveps = 1e-08, convepsEM = 1e-08, convepsNR = 1e-08, NRpor = 2, gamMixPor = 1, and cutpoint = 0.05.

In addition to the main functions, we have introduced several auxiliary functions to extract and report the results obtained from the main functions. These functions are listed in Table 2. One such example is the summary function, which summarizes the results of all functions in a standard manner.

6 The fmrs Package in Action

6.1 Example 1: FMAFTR Model with Log-Normal Sub-Distributions

In order to illustrate the application of fmrs, we begin by generating a dataset from an fmaft model. It is worth noting that for a comprehensive simulation study, users can refer to [29].

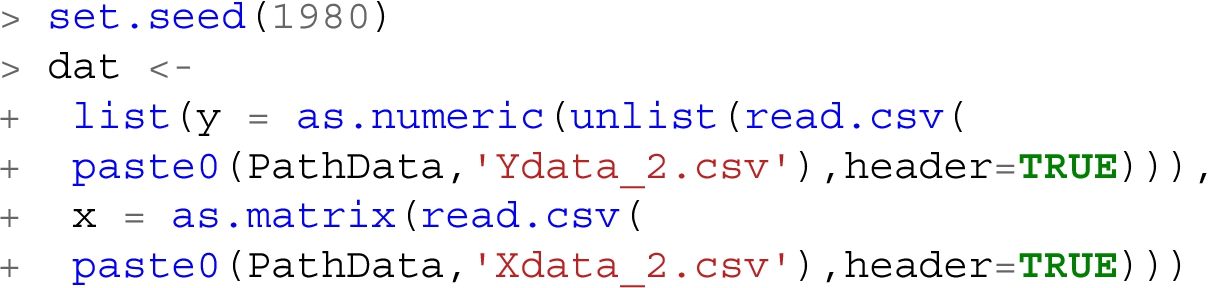

We generate the covariates from a multivariate normal distribution with a dimension of 10 and a sample size of 500. The mean vector is set to $\mathbf{0}$, and the variance-covariance matrix is $\Sigma =({0.25^{|l-m|}})$. Subsequently, we simulate time-to-event data from a finite mixture of two components using aft regression models with Log-Normal sub-distributions. We load the necessary libraries and assign the parameters of the model. The parameter values chosen for this simulation are provided in the following code:

One can use fmrs.gendata to generate data from an fmaftr model as follows:

With the simulated dataset in hand, we proceed to estimate the mles of the model parameters using the fmrs.mle function. It is worth noting that the initial values for the regression parameters are generated from a standard normal distribution.

It is evident that the mles of the regression coefficients are not equal to zero. As a result, the mle approach alone cannot provide a sparse solution. To achieve sparsity, we utilize the variable selection method developed by [29]. Once we obtain the mles, the next step is to determine a set of suitable tuning parameters. This can be accomplished by employing the component-wise approach implemented in the fmrs.tunsel function. However, in certain scenarios, it is worthwhile to explore whether the common tuning parameter approach yields superior results. This can be investigated through data-driven simulation studies, for example.

We have utilized the mle estimates as initial values to obtain the tuning parameters. In this phase, the same set of values is employed to conduct variable selection with an adaptive lasso penalty.

6.1.1 Common Tuning Parameters

If we desire to select a common tuning parameter, there is no need to execute any additional functions after fmrs.mle(.). Instead, we can employ a for-loop command to search for the optimal fit and the common tuning parameter using fmrs.varsel(.). The following code demonstrates an example of this process.

6.2 Example 2: FMR Model with Normal Sub-Distributions

As mentioned in Section 2, the mle and mple of an fmr model can be obtained by disregarding the censoring in the fmaftr setting. We select the following parameters to generate the data from an fmr model.

By specifying "norm" for disFamily in fmrs.gendata, we generate a dataset from an fmr model using the following code:

Similar to the above, we use Python to generate the data.

The mle of the fmr model parameters are obtained as follows:

The following code is used in selecting the component-wise tuning parameters:

Having selected the tuning parameters, we perform variable selection in fmr as follows:

6.3 Example 3: Comparison with the Existing Methods

We conducted a simulation study to compare the performance of fmrs with existing methods. Currently, fmrlasso is the only method available that offers a variable selection in fmr, and there is no package providing variable selection in fmaftr.

In our simulation study, we consider the model ${y_{i}}={\mathbf{x}_{i}}\boldsymbol{\beta }+{e_{i}}$, $i=1,\dots ,n$, where ${e_{i}}\stackrel{iid}{\sim }N(0,{\sigma ^{2}})$. For the fmr model, we set $K=2$, $n=500$, $d=5$, $\pi =[0.4,0.6]$, $\rho =0.25$, $\sigma =[1,1]$, ${\boldsymbol{\beta }_{1}}={[-1,2,0,0,-1,2]^{\top }}$, ${\boldsymbol{\beta }_{2}}={[2,1,1,0,0,0]^{\top }}$.

We generated $r=100$ datasets and evaluated the performance. The simulation results are presented in Table 4. The results demonstrate that fmrs outperforms fmrlasso in terms of correctly identifying zero or non-zero coefficients. The codes are given in the supplementary materials.

Table 4

Percentage of correctly identified regression coefficients as zero or non-zero.

| Package | ${\beta _{11}}$ | ${\beta _{21}}$ | ${\beta _{31}}$ | ${\beta _{41}}$ | ${\beta _{51}}$ | ${\beta _{12}}$ | ${\beta _{22}}$ | ${\beta _{32}}$ | ${\beta _{42}}$ | ${\beta _{52}}$ |

| fmr | 100 | 100 | 88 | 89 | 89 | 100 | 66 | 77 | 100 | 100 |

| fmrlasso | 100 | 100 | 63 | 55 | 54 | 100 | 47 | 61 | 100 | 100 |

It is worth noting that fmrs significantly outperforms fmrlasso in terms of computational speed. In the aforementioned simulation study, where bic was used, fmrs was found to be over 20 times faster than fmrlasso. Moreover, unlike fmrlasso, fmrs provides variable selection capabilities for various penalties such as mcp, scad, and others. Additionally, fmrs can handle variable selection in the presence of censored observations, which is a limitation of fmrlasso.

6.4 Example 4: Non-Mixture Models

As stated by the reviewers, the non-mixture versions of the models implemented in fmrs are highly beneficial for researchers. Therefore, we have added these models to the package. The users need to specify $nComp=1$ to fit such models. A sample code for the accelerated failure time regression model is given as follows:

7 Analyzing Lung Cancer Data

In this section, we analyze lung cancer data obtained from the survival package [22]. The dataset consists of information from 228 subjects with 7 covariates. The data includes the survival time of patients with lung cancer along with their censoring status. The following information is available for each subject:

-

• time: Survival time in days;

-

• status: censoring status 1=censored, 2=dead;

-

• age: Age in years;

-

• sex: Male=1 Female=2;

-

• ph.ecog: Eastern Cooperative Oncology Group (ECOG) performance status (0=good 5=dead);

-

• ph.karno: Karnofsky performance score (bad=0-good=100) rated by physician;

-

• pat.karno: Karnofsky performance score as rated by the patient;

-

• meal.cal: Calories consumed at meals;

-

• wt.loss: Weight loss in the last six months.

In our analysis, we first remove the variable meal.cal due to a large number of missing values. Next, we exclude subjects with missing information, resulting in a reduced number of subjects (patients) to 210. The analysis includes the remaining 6 covariates.

We perform variable selection using fmrs. We fit fmaftr models of order K ranging from 2 to 5 using the order selection technique described in Section 4.2 and the component-wise technique described in Section 4.1. We use the following parameter values: cutpoint = 0.001, LambMin = 0.001, LambMax = 1, and nLamb = 1000. The code is given as follows:

The results show that a mixture of two components is selected, with approximately 69% of the patients classified in Component 1. In Component 1, the variables sex, ph.karno, and pat.karno are selected with positive effects. On the other hand, in Component 2, the variables age, ph.ecog, and ph.karno are selected with negative effects. Component 1 represents a more aggressive form of the disease, characterized by a lower survival time (see Figure 1).

8 Concluding Remarks

We have developed the R package fmrs to perform sparse estimation in finite mixture models, including the finite mixture of accelerated failure time and the finite mixture of regression models. The main functions in the package are implemented in C language to enhance computational efficiency. The R functions are written using S4-methods. The package also includes ridge regression, and it implements various penalty functions. Our tests show that the fmrs package outperforms fmrlasso in terms of computational time.

Censoring is a crucial aspect of time-to-event data, and ignoring it can significantly deteriorate the performance of variable selection methods. Additionally, heterogeneity of effects is common in many time-to-event datasets, and ignoring it can lead to misleading analyses [29].

The classical statistical theory assumes the validity of statistical inference (tests and confidence intervals) when model selection and model fitting are performed separately [3]. If the focus of data analysis is solely on mle and statistical inference such as goodness-of-fit tests, one can obtain the variance-covariance matrix from the Hessian matrix and perform inference accordingly. However, variable selection methods produce stochastic models, which invalidate classical inferences. Therefore, post-selection methods must be developed to address this issue, which is beyond the scope of this paper.

We recommend users repeat the EM algorithm with different initialization. The best initialization is the one that yields the highest likelihood value. It is worth noting that several initialization strategies have been proposed in the literature [4].

The method presented in [29] is suitable for scenarios with a small number of variables (p) and a large number of observations (n). In cases where the total number of parameters (${p^{\ast }}=K(p+3)-1$) approaches or exceeds n, one may utilize the fmrs package. However, it is essential to approach such cases with caution and carefully select an optimal ridge tuning parameter. Moreover, for high-dimensional settings, the development of new methods is required.

To expand the scope of our package, we plan to include additional sub-distribution functions, such as the Generalized Gamma and Gompertz distributions, in the near future. For the Gamma and Generalized Gamma distributions, we will investigate closed-form solutions for parameter estimation based on the method of moments and implement them in the package.